Shane Chaffe


How do we back up Contentful data using AWS?

This is something that has always been at the forefront of my mind, but it was a task I had never tackled until today. Contentful already offers a few packages that help us get data out of the platform, namely their export package, contentful-export.

One thing to be cautious of is that the export package relies heavily on the Contentful Management API, which has its own rate limits. It is quite common to see rate-limiting errors in the logs, because the export can exceed the limit of 10 requests per second; fortunately, it retries automatically. That said, if you wish, you can fork the repository to configure this and stagger the requests.
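If you do fork the package to stagger requests, the usual pattern is exponential backoff. The sketch below is a generic JavaScript helper, not code from contentful-export; `withBackoff` and its options are hypothetical names, and the `shouldRetry` predicate is where you would check for a rate-limit (HTTP 429) error in whatever shape your client throws it.

```javascript
// Hypothetical helper: retry an async call with exponential backoff.
// Not part of contentful-export; a sketch of the general technique.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function withBackoff(fn, { retries = 5, baseDelayMs = 200, shouldRetry = () => true } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      // Give up once the retry budget is spent or the error isn't retryable.
      if (attempt >= retries || !shouldRetry(err)) throw err;
      // With the defaults this waits 200ms, 400ms, 800ms, ... between attempts.
      await sleep(baseDelayMs * 2 ** attempt);
    }
  }
}
```

In a fork you would wrap individual Management API requests rather than the whole export, and adding a little random jitter to the delay helps when several requests back off at the same time.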

Contentful Backup Best Practices

Having a regular backup is key to business continuity and security in an emergency. How often you run it is completely up to you: for some organisations a daily backup is enough, while others may need one every 5 minutes, depending on how often they create and update content and, naturally, the industry they are in.

The Contentful export package lets you customise which data you export. With that in mind, here are some key points to consider:

Do you really need to backup your Contentful model every time?

Do you require all assets on each backup?

If your backup is too large, consider splitting it into manageable chunks

A good way to trial this is to run the export package locally before putting it into a pipeline or, as in this example, into AWS.
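The questions above map onto contentful-export options. The sketch below builds one options object per content type, skipping the content model and asset downloads so each run stays small. `buildSplitExportOptions` and the content type IDs are hypothetical; the option names (`skipContentModel`, `downloadAssets`, `queryEntries`, `contentFile`) are taken from the contentful-export README, so check them against the version you install.

```javascript
// Hypothetical helper: one contentful-export options object per content type.
// Option names come from the contentful-export README; verify them for your version.
function buildSplitExportOptions(baseOptions, contentTypeIds) {
  return contentTypeIds.map((id) => ({
    ...baseOptions,
    skipContentModel: true, // don't re-export the content model on every run
    downloadAssets: false, // export asset metadata only, not the binary files
    queryEntries: [`content_type=${id}`], // only entries of this content type
    contentFile: `contentful_backup_${id}.json`, // one file per content type
  }));
}
```

You could then loop over the result and `await contentfulExport(opts)` for each object, uploading each file separately. Each run may still include assets and other non-entry data unless you filter or skip those too.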

So, how do I back up?

Well, firstly you need to create a project that utilises a few packages. In this example, we'll be using JavaScript.

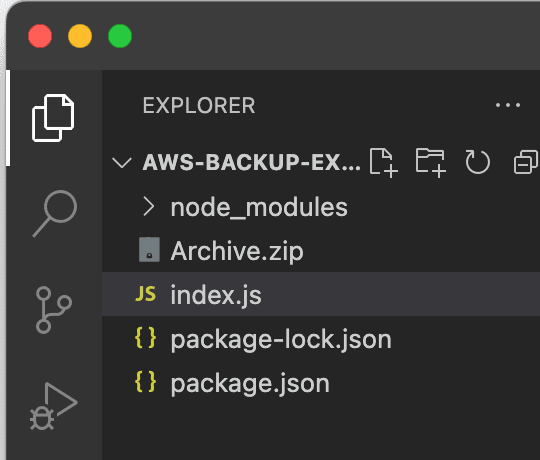

Go ahead and create a project folder containing a package.json file and an index.js file.

Once that is done, let's take a look at what we need in terms of packages and our script in index.js.

Copy the following into package.json and run npm install in the terminal.

```json
{
  "name": "aws-backup-example",
  "version": "1.0.0",
  "main": "index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "Shane Chaffe",
  "license": "ISC",
  "description": "An example to backup to AWS",
  "dependencies": {
    "aws-sdk": "^2.1692.0",
    "contentful-export": "^7.21.3"
  }
}
```

The code uses Node's built-in fs module, so no separate file-system package is needed as a dependency.

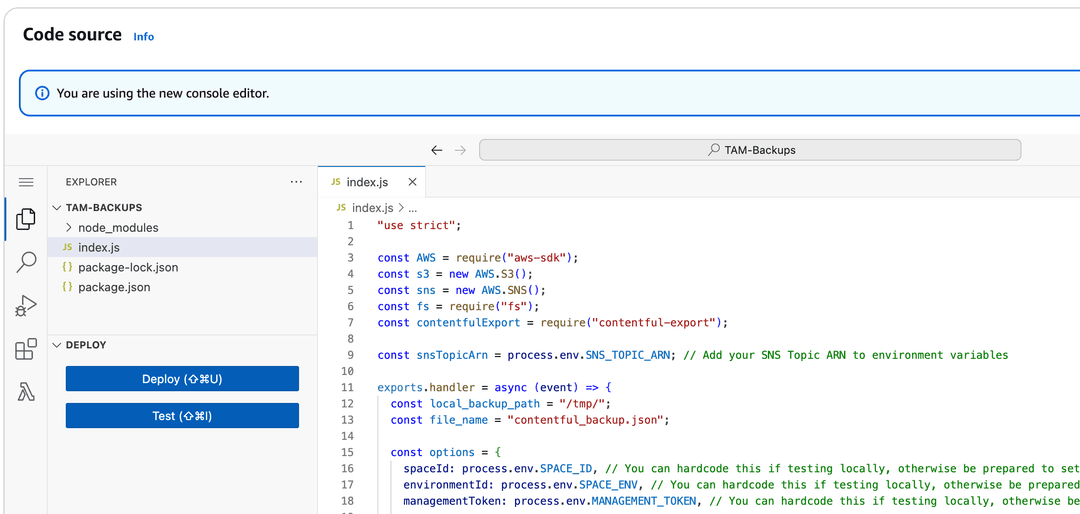

Now index.js:

```javascript
"use strict";

const fs = require("fs");
const AWS = require("aws-sdk");
const contentfulExport = require("contentful-export");

const s3 = new AWS.S3();
const sns = new AWS.SNS();

const snsTopicArn = process.env.SNS_TOPIC_ARN; // Add your SNS topic ARN to the environment variables

exports.handler = async (event) => {
  const local_backup_path = "/tmp/"; // /tmp is the only writable directory in Lambda
  const file_name = "contentful_backup.json";

  // You can hardcode these values while testing locally; otherwise set them up
  // as environment variables in AWS and in a local env file.
  const options = {
    spaceId: process.env.SPACE_ID,
    environmentId: process.env.SPACE_ENV,
    managementToken: process.env.MANAGEMENT_TOKEN,
    contentFile: file_name,
    exportDir: local_backup_path,
    useVerboseRenderer: false,
    saveFile: true,
  };

  try {
    await contentfulExport(options);
    console.log("Your space data has been downloaded!");

    // Save file to AWS S3
    console.log("Preparing file for AWS S3!");
    const outputFile = local_backup_path + file_name;
    // readFileSync already returns a Buffer, so no Buffer.from() wrapper is needed
    const fileBuffer = fs.readFileSync(outputFile);
    fs.unlinkSync(outputFile); // free up /tmp for the next invocation
    await uploadFile(fileBuffer);
    console.log("Returning response:", outputFile);

    // Notify success via SNS; the topic is subscribed to an email address
    await sendEmail("Backup Successful", `The backup file ${outputFile} was created and uploaded successfully.`);
    return sendResponse(200, outputFile);
  } catch (err) {
    console.error("Oh no! Some errors occurred!", err);
    // Notify failure via SNS; the topic is subscribed to an email address
    await sendEmail("Backup Failed", `The backup process failed with the following error: ${err.message}`);
    return sendResponse(500, `Error: ${err.message}`);
  }
};

const sendResponse = (status, body) => ({ statusCode: status, body });

const uploadFile = async (buffer) => {
  // Date stamp in DD-MM-YY format
  const now = new Date();
  const formattedDate = `${now.getDate().toString().padStart(2, "0")}-${(now.getMonth() + 1)
    .toString()
    .padStart(2, "0")}-${now.getFullYear().toString().slice(-2)}`;

  const params = {
    Bucket: process.env.S3_BUCKET_NAME,
    Key: `backups/cf-backup-${formattedDate}.json`,
    Body: buffer,
  };

  // Log only the destination, not the (potentially large) file body
  console.log("Uploading file to S3:", params.Bucket, params.Key);

  try {
    const result = await s3.putObject(params).promise();
    console.log("File uploaded successfully:", result);
    return result;
  } catch (err) {
    console.error("Error uploading file to S3:", err);
    throw err;
  }
};

const sendEmail = async (subject, message) => {
  const params = {
    TopicArn: snsTopicArn,
    Subject: subject,
    Message: message,
  };

  try {
    const result = await sns.publish(params).promise();
    console.log(`Email sent successfully: ${JSON.stringify(result)}`);
    return result;
  } catch (err) {
    console.error("Failed to send email notification:", err);
    throw err;
  }
};
```

Feel free to read through the code to make sure you understand it, but if you'd like a TLDR, here it is:

We use the AWS SDK together with the contentful-export package to manage our backups. The export requests the data from Contentful and writes it to /tmp; we then upload that file to an Amazon S3 bucket and send a success or failure notification via SNS.
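One thing to watch in `uploadFile`: the key uses a DD-MM-YY date, so a second run on the same day overwrites the first backup (unless bucket versioning is enabled), and the keys don't list in chronological order. If you back up more often than daily, a UTC timestamp in year-first order avoids both. `buildBackupKey` is a hypothetical replacement for the `formattedDate` logic, not part of the original code.

```javascript
// Hypothetical helper: a sortable, time-stamped S3 key in UTC.
function buildBackupKey(date = new Date()) {
  // toISOString() returns e.g. "2024-01-05T09:30:00.000Z"; keep up to the seconds
  // and replace ":" with "-" so the key is easy to type and copy.
  const stamp = date.toISOString().slice(0, 19).replace(/:/g, "-");
  return `backups/cf-backup-${stamp}Z.json`;
}
```

Inside `uploadFile` you would then set `Key: buildBackupKey()`.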

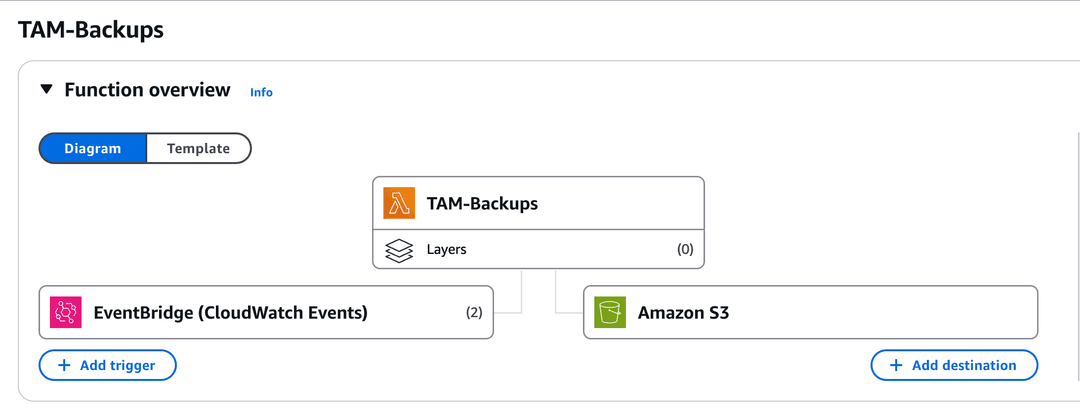

Let's take a look inside AWS. Navigate to Lambda and, if you haven't already, create a new function and name it however you like.

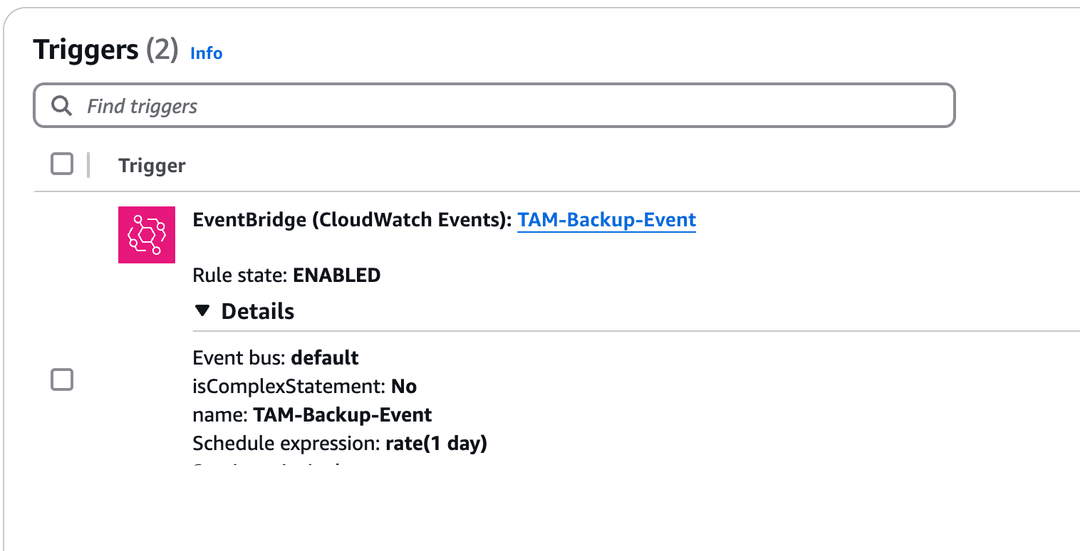

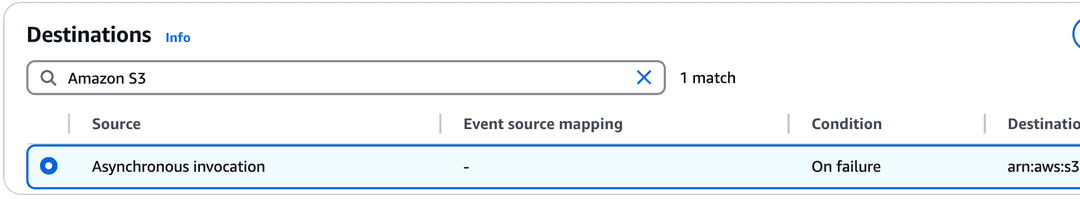

You'll notice that our destination is indeed S3 and that we're using EventBridge to handle the scheduling. Go ahead and also create a bucket, and be sure to note its ARN, as you'll need it later.

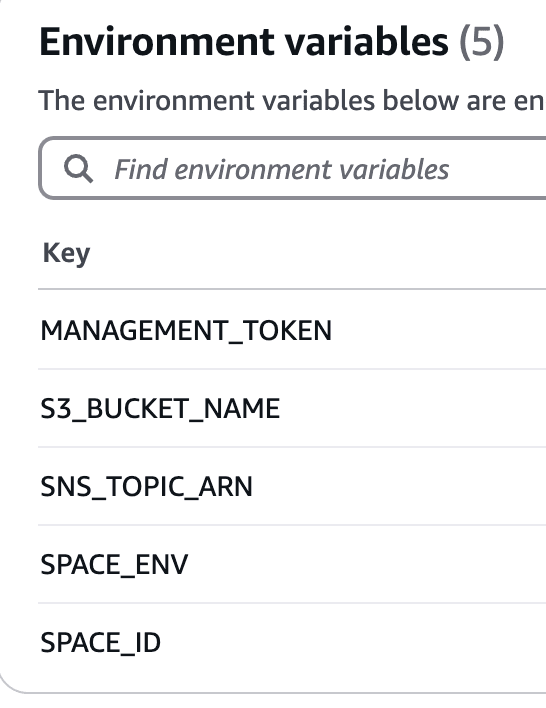

Before we continue in the function, configure your environment variables in the Configuration tab. The code reads SPACE_ID, SPACE_ENV, MANAGEMENT_TOKEN, S3_BUCKET_NAME and SNS_TOPIC_ARN.

You'll need all of these for the function to run successfully. If you hard-coded any of these values locally, update the code to read them from the environment instead, as nobody likes an exposed key.
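As written, the handler only notices a missing variable when a call fails partway through (a missing SNS_TOPIC_ARN, for instance, only surfaces when the notification is sent), so it can help to check them up front. This is a minimal sketch; `requireEnv` and `REQUIRED_ENV` are hypothetical names, while the variable names are the ones index.js reads.

```javascript
// Hypothetical fail-fast check for the environment variables index.js reads.
const REQUIRED_ENV = ["SPACE_ID", "SPACE_ENV", "MANAGEMENT_TOKEN", "S3_BUCKET_NAME", "SNS_TOPIC_ARN"];

function requireEnv(env = process.env, names = REQUIRED_ENV) {
  // Treat unset and empty values as missing.
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  // Return only the values the handler needs, keyed by name.
  return Object.fromEntries(names.map((name) => [name, env[name]]));
}
```

You could call `requireEnv()` at the top of `exports.handler`, before `contentfulExport` runs, so a misconfigured function fails immediately with a clear message.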

Go back to the Code tab, choose the "Upload from" option and select ".zip file". At this point, zip your project locally (including the node_modules folder, since the dependencies must be bundled with the function) and upload it so Lambda has an identical copy.

In the configuration, I also recommend raising the timeout above the default of 3 seconds, as the backup will take longer depending on the size of your data (Lambda allows up to 15 minutes).

Now head over to CloudWatch Events (now part of Amazon EventBridge) and configure a schedule that runs as often as you need. In my example, I only do this once a day, so the schedule expression is: rate(1 day)
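The schedule expression has a small gotcha: EventBridge requires a singular unit when the value is 1 and a plural unit otherwise, so rate(1 day) and rate(5 minutes) are valid but rate(1 days) is not. If you generate the schedule from code, for example in a deployment script, a small helper like this hypothetical `rateExpression` keeps that rule in one place.

```javascript
// Hypothetical helper: build an EventBridge rate expression with the correct unit form.
const UNITS = ["minute", "hour", "day"];

function rateExpression(value, unit) {
  if (!Number.isInteger(value) || value < 1) {
    throw new Error("value must be a positive integer");
  }
  const base = unit.replace(/s$/, ""); // accept "day" or "days", "minute" or "minutes", etc.
  if (!UNITS.includes(base)) {
    throw new Error(`unit must be one of ${UNITS.join(", ")}`);
  }
  // Singular unit for 1, plural otherwise.
  return `rate(${value} ${value === 1 ? base : base + "s"})`;
}
```

For a fixed time of day rather than an interval, EventBridge also accepts cron expressions, such as `cron(0 2 * * ? *)` for 02:00 UTC every day.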

Then add your S3 bucket as the destination; you will need to retrieve the ARN from your bucket.

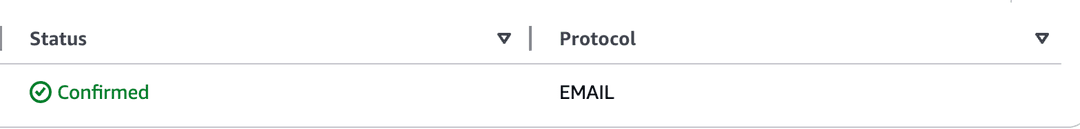

Now, at a code level, we are using SNS to send emails, so you'll also need to create a topic in SNS and subscribe the email address(es) you wish to alert on success or failure. Make sure the endpoint is what you expect and that you have access to that inbox, as you'll need to confirm the subscription via an emailed link.

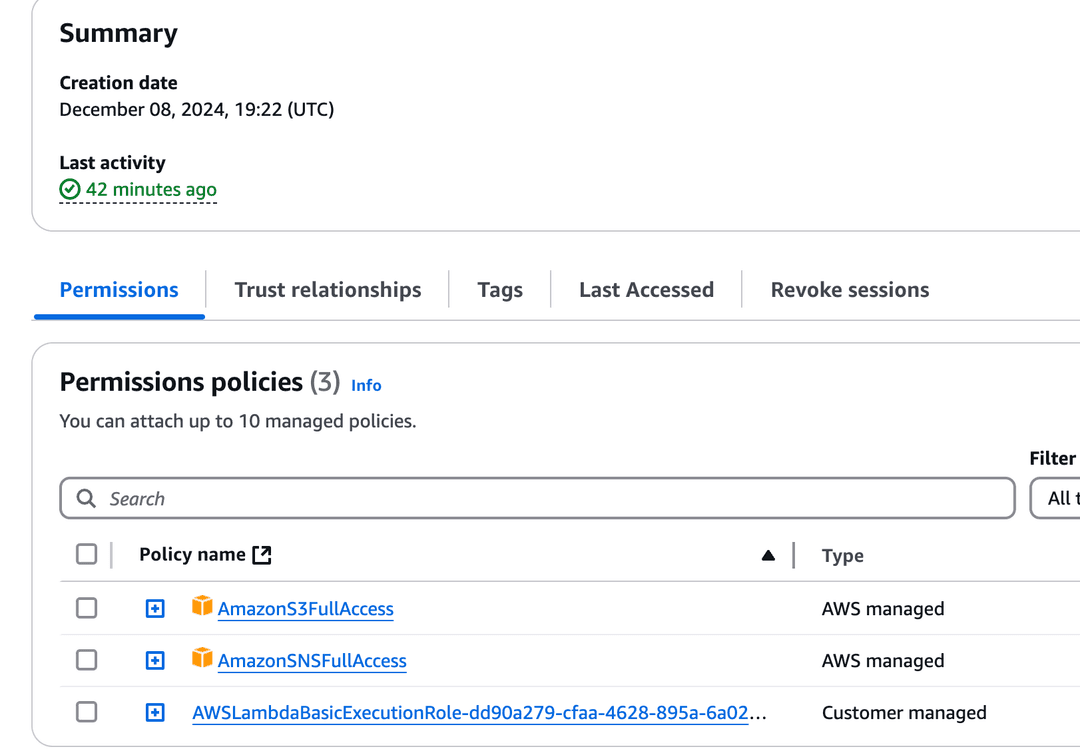

Now, thanks to the code, a test run should send an email to the subscribed address. However, now that all of this is set up, you need to make sure the function has the correct IAM permissions. I created a simple role to execute the required functionality using this configuration:

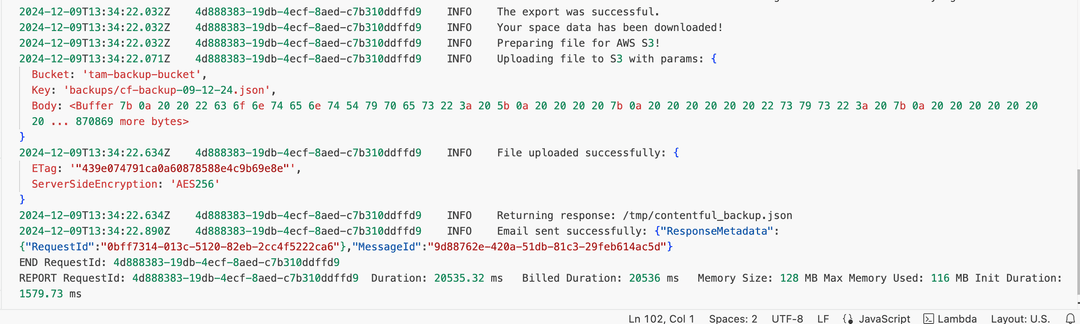
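The role itself is only shown as a screenshot. As an assumption, a least-privilege policy for this particular function needs to allow three things: writing objects to the bucket, publishing to the SNS topic, and writing logs. The sketch below builds that policy document as a plain JavaScript object so it can be printed as JSON; `buildBackupPolicy` and the example names are hypothetical, and the logs statement mirrors what the AWS-managed AWSLambdaBasicExecutionRole policy grants.

```javascript
// Hypothetical least-privilege policy for the backup function (an assumption,
// not the author's exact role).
function buildBackupPolicy(bucketName, topicArn) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        // uploadFile only writes objects under the backups/ prefix
        Effect: "Allow",
        Action: ["s3:PutObject"],
        Resource: [`arn:aws:s3:::${bucketName}/backups/*`],
      },
      {
        // sendEmail publishes to a single SNS topic
        Effect: "Allow",
        Action: ["sns:Publish"],
        Resource: [topicArn],
      },
      {
        // console.log output is written to CloudWatch Logs
        Effect: "Allow",
        Action: ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
        Resource: ["*"],
      },
    ],
  };
}
```

For example, `JSON.stringify(buildBackupPolicy("my-contentful-backups", "arn:aws:sns:eu-west-2:123456789012:contentful-backup-alerts"), null, 2)` prints JSON you could paste into the IAM policy editor (both names are placeholders).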

So at this point, we have automatic backups configured as well as alerting. Let's test it, and then add an extra layer of alerts with CloudWatch. Back in the Lambda function's Code tab, we can run a test against our function; go ahead and try it, and you should see something like this:

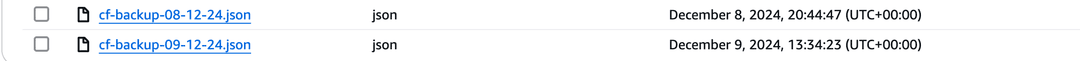

Now we can also verify this in our S3 bucket by checking whether a file was created.

Looks like our upload was successful, so our automation is now complete. Feel free to configure additional reporting in CloudWatch, for example an alarm with a threshold of 0 so you are alerted on any failure, or configure it however you deem necessary.
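The CloudWatch alarm can also be described in code. The sketch below builds the parameters for an alarm on the function's Errors metric that fires when there are more than zero errors in an hour; `buildErrorAlarmParams` and the example names are hypothetical, and the field names follow the CloudWatch PutMetricAlarm API (with SDK v2 you would pass the object to `new AWS.CloudWatch().putMetricAlarm(params).promise()`). One caveat: the handler in index.js catches export errors and returns a 500 rather than throwing, so Lambda only records an error for crashes, timeouts, or a failing notification; to have this alarm catch every failed backup, you would rethrow after sending the failure email.

```javascript
// Hypothetical helper: PutMetricAlarm parameters for "alert on any Lambda error".
function buildErrorAlarmParams(functionName, topicArn) {
  return {
    AlarmName: `${functionName}-errors`,
    Namespace: "AWS/Lambda",
    MetricName: "Errors",
    Dimensions: [{ Name: "FunctionName", Value: functionName }],
    Statistic: "Sum",
    Period: 3600, // one-hour buckets
    EvaluationPeriods: 1,
    Threshold: 0,
    ComparisonOperator: "GreaterThanThreshold", // alarm on more than zero errors
    TreatMissingData: "notBreaching", // a period with no invocations is not a failure
    AlarmActions: [topicArn], // notify the same SNS topic used for the backup emails
  };
}
```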

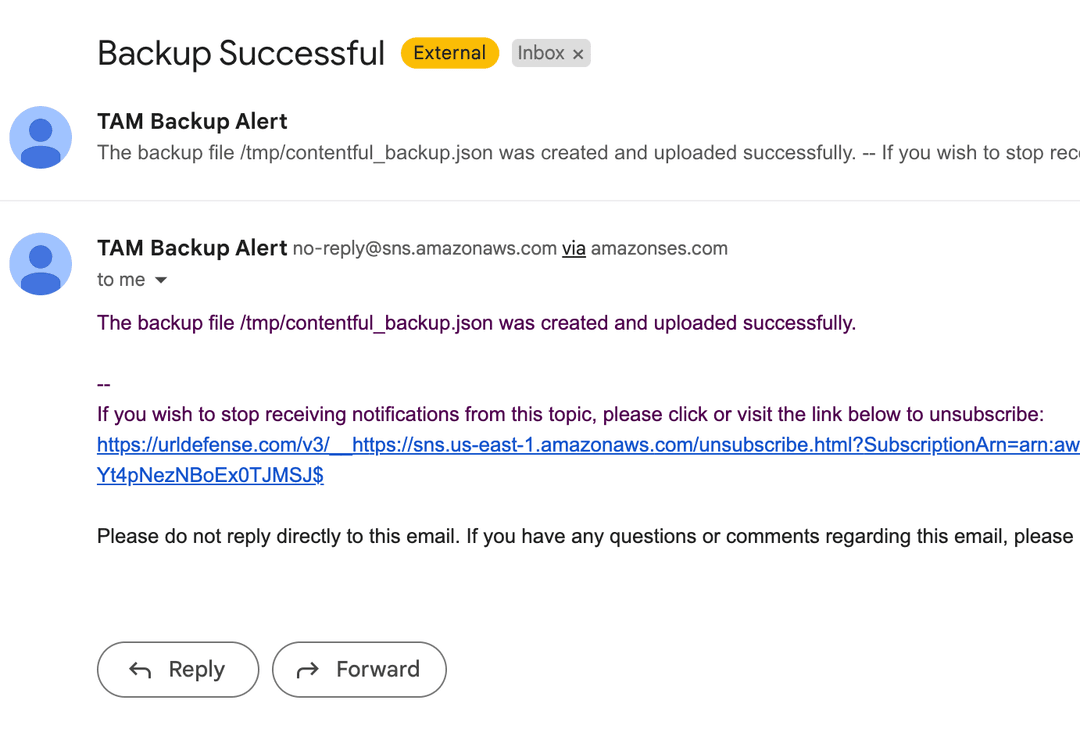

When I check my email, I can also see a message stating that my backup was successful, which reassures me that this is working as expected and will run on the cadence I have set to keep my data safe.

As a note, if you're using the CLI tool, you can make use of this flag: --max-allowed-limit 100

If an export fails with "Error: 400 - Bad Request - Response size too big.", that's because Contentful limits response sizes (see Contentful's technical limits documentation for details). To resolve it, limit the number of entities received in a single request by setting the maxAllowedLimit option:

```javascript
contentfulExport({
  proxy: "https://cat:dog@example.com:1234", // proxy settings are only needed if you connect through a proxy
  rawProxy: true,
  maxAllowedLimit: 50,
  // ...
});
```
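If you'd rather not pick a limit by hand, one option is to start with a larger page size and halve it whenever the export fails with the response-size error. This is a sketch under stated assumptions: `exportWithShrinkingLimit` is hypothetical, the starting limit of 1000 is arbitrary, and matching the error by its message text assumes the thrown error contains "Response size too big", so check what your version of contentful-export actually throws.

```javascript
// Hypothetical helper: rerun an export with a smaller maxAllowedLimit on "response too big".
// runExport is injected (e.g. contentfulExport) so the retry logic can be tested on its own.
async function exportWithShrinkingLimit(runExport, options, { startLimit = 1000, minLimit = 10 } = {}) {
  let limit = startLimit;
  for (;;) {
    try {
      return await runExport({ ...options, maxAllowedLimit: limit });
    } catch (err) {
      // Assumption: the error message mentions "Response size too big".
      const tooBig = /Response size too big/i.test(String(err && err.message));
      if (!tooBig || limit <= minLimit) throw err;
      // Halve the page size (never below minLimit) and rerun the export.
      limit = Math.max(minLimit, Math.floor(limit / 2));
    }
  }
}
```

Usage would be `await exportWithShrinkingLimit(contentfulExport, options)`. Note that each retry reruns the whole export, so for large spaces it is usually cheaper to settle on a working limit once and hard-code it.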